Why voxels for Geospatial and Digital Twins?

Purpose

We built Polaron for our own use cases, after struggling to deploy the available geospatial and games-industry tools in terabyte- and petabyte-scale spatial scenarios:

Games-industry tools perform well in small scenarios, which is what they were designed for. They are fast and produce impressive visuals, but they break down at the scale we operate at, or when we flood them with real-time data.

Geospatial tools cope with the scale but fall behind on features, speed, and overall accessibility and look.

Polaron sits in the middle, where the gap is. In voxelized environments we can compress spatial data efficiently while keeping access and traversal robust.

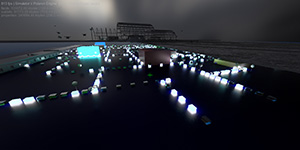

Deployment demonstration in Digital Twins creation

Back-end + integration

We designed Polaron to run mainly on the back end. It is computationally heavy and scales well on GPU clusters. On low-end devices (such as smartphones) we simply pull data from the back end and display it through conventional platforms like Unity. In parallel, we keep developing Polaron's own rendering pipeline and our own lightweight front end.

Technology

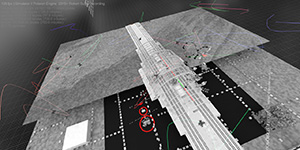

How do we store and process terabytes of spatial data? We organise the scanned RGB + depth data from sensors in voxel-like spatial data grids. Packing is lossless but very efficient, and it is accelerated with distance fields at the higher levels of the hierarchy for fast traversal. The design balances compression ratio, memory access and manipulation, and traversal speed, and it is ready for physics and volumetric AI.
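To illustrate how a distance field at a coarse level can speed up traversal, here is a minimal sketch. It is not Polaron's implementation: the two-level layout, the brick size of 8, the Chebyshev metric, the brute-force field construction, and the names `SparseVoxelGrid` and `first_hit_along_x` are all assumptions chosen for brevity. Only occupied bricks are stored, and each coarse cell records how many bricks away the nearest occupied brick is, so a ray can jump over empty space in one step.

```python
from itertools import product

BRICK = 8  # voxels per brick edge (assumed value, for illustration only)


class SparseVoxelGrid:
    """Two-level grid: a coarse grid of bricks, each brick holding voxels sparsely."""

    def __init__(self, bricks_per_axis):
        self.n = bricks_per_axis
        self.bricks = {}        # (bx, by, bz) -> {(lx, ly, lz): payload}
        self.coarse_dist = {}   # (bx, by, bz) -> distance in bricks to nearest occupied brick

    def set_voxel(self, x, y, z, payload):
        key = (x // BRICK, y // BRICK, z // BRICK)
        self.bricks.setdefault(key, {})[(x % BRICK, y % BRICK, z % BRICK)] = payload

    def get_voxel(self, x, y, z):
        brick = self.bricks.get((x // BRICK, y // BRICK, z // BRICK))
        if brick is None:
            return None
        return brick.get((x % BRICK, y % BRICK, z % BRICK))

    def build_distance_field(self):
        """Brute-force Chebyshev distance from every coarse cell to the nearest occupied brick."""
        occupied = list(self.bricks)
        for cell in product(range(self.n), repeat=3):
            self.coarse_dist[cell] = min(
                (max(abs(c - o) for c, o in zip(cell, occ)) for occ in occupied),
                default=self.n,  # empty scene: every cell is "far" from anything
            )


def first_hit_along_x(grid, y, z):
    """March a +x ray through the grid; return (x of first occupied voxel, coarse steps taken)."""
    by, bz = y // BRICK, z // BRICK
    bx, steps = 0, 0
    while bx < grid.n:
        steps += 1
        d = grid.coarse_dist[(bx, by, bz)]
        if d > 0:
            bx += d  # the next d - 1 bricks are guaranteed empty, so skip them
            continue
        # Occupied brick: fall back to a fine scan of the voxels on the ray.
        for x in range(bx * BRICK, (bx + 1) * BRICK):
            if grid.get_voxel(x, y, z) is not None:
                return x, steps
        bx += 1
    return None, steps


grid = SparseVoxelGrid(bricks_per_axis=8)   # 64^3 voxels
grid.set_voxel(50, 3, 3, (255, 0, 0))
grid.build_distance_field()
print(first_hit_along_x(grid, 3, 3))        # (50, 2)
```

In this toy scene the ray reaches the voxel at x = 50 after two coarse steps instead of visiting 50 voxels one by one. A production system would build the field incrementally and on the GPU rather than by brute force.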

Point clouds: The current pipeline injects up to 20 million points per second from depth sensors while maintaining rendering at interactive rates on a single RTX 3090 GPU. We are not aware of any other technology capable of the same.
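Conceptually, injecting a point cloud means quantising each point to a voxel index and writing its colour there. The sketch below shows only that step on the CPU, under stated assumptions: the function name `voxelize_points`, the `(x, y, z, r, g, b)` tuple layout, the 5 cm voxel size, and the last-write-wins rule are all hypothetical and say nothing about how Polaron resolves collisions or schedules work on the GPU.

```python
import math


def voxelize_points(points, origin, voxel_size):
    """Quantise (x, y, z, r, g, b) points to integer voxel indices.

    Returns {(ix, iy, iz): (r, g, b)}. If several points fall into the same
    voxel, the last one wins (an assumption made for this sketch).
    """
    voxels = {}
    for x, y, z, r, g, b in points:
        index = tuple(
            math.floor((p - o) / voxel_size) for p, o in zip((x, y, z), origin)
        )
        voxels[index] = (r, g, b)
    return voxels


points = [
    (0.12, 0.00, -0.01, 255, 0, 0),
    (0.14, 0.01, -0.04, 0, 255, 0),   # lands in the same 5 cm voxel as the first point
    (1.00, 0.52, 0.26, 0, 0, 255),
]
scene = voxelize_points(points, origin=(0.0, 0.0, 0.0), voxel_size=0.05)
print(len(scene))  # 2
```

Using `math.floor` rather than `int()` keeps negative coordinates in the correct voxel, since `int()` truncates towards zero.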

Scene size: up to a 16k^3 voxel grid on a single GPU; up to 256k^3 on a GPU cluster (larger grids are possible in theory but have not yet been tested).
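A quick back-of-the-envelope calculation shows why grids of this size cannot be stored densely. The figures below assume that "16k" means 16 × 1024 voxels per edge and that each voxel carries 4 bytes (one RGBA value); both are assumptions for illustration, not published Polaron parameters.

```python
def dense_bytes(edge_voxels, bytes_per_voxel=4):
    """Memory needed to store a cubic grid densely, with no compression."""
    return edge_voxels ** 3 * bytes_per_voxel


single_gpu = dense_bytes(16 * 1024)    # 16k^3 grid
cluster = dense_bytes(256 * 1024)      # 256k^3 grid

print(single_gpu / 2**40, "TiB")  # 16.0 TiB
print(cluster / 2**50, "PiB")     # 64.0 PiB
```

An RTX 3090 has 24 GB of memory, so a 16k^3 scene only fits on one card if empty space is skipped and occupied regions are packed compactly.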

Info stored with each voxel: Any custom data alongside grey, RGB or RGBA colour channels. Ready for physics (including fluid dynamics for flood simulations and other natural-disaster scenarios).

Physical materials: reflection, refraction and light scattering.

Rendering: Fully path traced (progressive spatio-temporal reprojection).

Importing from conventional formats: PCD, OBJ, elevation maps, shapefiles, and SVG are already supported.

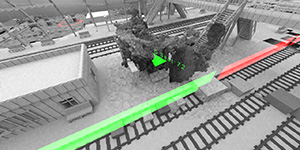

Mapping (SLAM): From sensors such as monocular cameras (structure from motion), stereo cameras (self-supervised learning) and lidar (directly from the point cloud). The data is injected straight into the volumetric scene in real time.

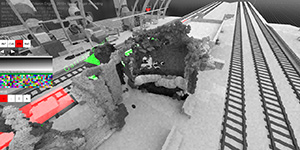

AI: Trained on volumetric data, it automates feature detection and annotates the voxels. Noise and errors can easily be corrected manually with the built-in editors.

Editing: There are currently two built-in visual editors: one for importing and transforming traditional mesh and other shape formats, the other for voxel sculpting (add/delete via numerous brushes, change colour and custom properties including annotation), plus a command-line console.

General engine features and interactivity demonstration

Animation stress test (single voxel scene where moving objects' voxels are removed, transformed, and reinjected)
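The remove, transform, reinject cycle described in this caption can be sketched as follows. This is an illustration only: the dictionary-based scene, the function name `move_object`, and the restriction to integer translations are assumptions, and the sketch ignores what happens to background voxels that a moving object overwrites.

```python
def move_object(scene, object_voxels, offset):
    """Remove an object's voxels from the scene, translate them, and reinject them.

    scene:          {(x, y, z): payload}, modified in place
    object_voxels:  set of coordinates currently belonging to the object
    offset:         integer (dx, dy, dz) translation
    Returns the object's new set of coordinates.
    """
    # 1. Remove: take every object voxel out before writing anything back,
    #    so old and new positions may overlap safely.
    payloads = {coord: scene.pop(coord) for coord in object_voxels}

    # 2. Transform and 3. reinject.
    moved = set()
    for (x, y, z), payload in payloads.items():
        new_coord = (x + offset[0], y + offset[1], z + offset[2])
        scene[new_coord] = payload  # overwrites whatever was there (sketch limitation)
        moved.add(new_coord)
    return moved


scene = {(0, 0, 0): "a", (1, 0, 0): "b", (5, 5, 5): "background"}
obj = {(0, 0, 0), (1, 0, 0)}
obj = move_object(scene, obj, offset=(1, 0, 0))
print(sorted(obj))  # [(1, 0, 0), (2, 0, 0)]
```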

Live sensor data integration from a stereo camera (a person walks into the room, waves their arms, turns, then leaves)